#supervised ai

Explore tagged Tumblr posts

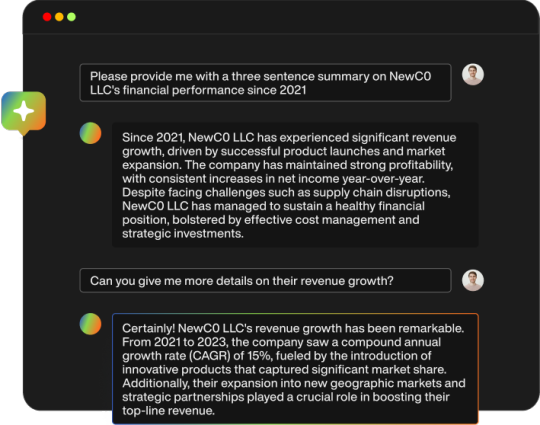

Text

Humans are not perfectly vigilant

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Here's a fun AI story: a security researcher noticed that large companies' AI-authored source-code repeatedly referenced a nonexistent library (an AI "hallucination"), so he created a (defanged) malicious library with that name and uploaded it, and thousands of developers automatically downloaded and incorporated it as they compiled the code:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

These "hallucinations" are a stubbornly persistent feature of large language models, because these models only give the illusion of understanding; in reality, they are just sophisticated forms of autocomplete, drawing on huge databases to make shrewd (but reliably fallible) guesses about which word comes next:

https://dl.acm.org/doi/10.1145/3442188.3445922

Guessing the next word without understanding the meaning of the resulting sentence makes unsupervised LLMs unsuitable for high-stakes tasks. The whole AI bubble is based on convincing investors that one or more of the following is true:

There are low-stakes, high-value tasks that will recoup the massive costs of AI training and operation;

There are high-stakes, high-value tasks that can be made cheaper by adding an AI to a human operator;

Adding more training data to an AI will make it stop hallucinating, so that it can take over high-stakes, high-value tasks without a "human in the loop."

These are dubious propositions. There's a universe of low-stakes, low-value tasks – political disinformation, spam, fraud, academic cheating, nonconsensual porn, dialog for video-game NPCs – but none of them seem likely to generate enough revenue for AI companies to justify the billions spent on models, nor the trillions in valuation attributed to AI companies:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

The proposition that increasing training data will decrease hallucinations is hotly contested among AI practitioners. I confess that I don't know enough about AI to evaluate opposing sides' claims, but even if you stipulate that adding lots of human-generated training data will make the software a better guesser, there's a serious problem. All those low-value, low-stakes applications are flooding the internet with botshit. After all, the one thing AI is unarguably very good at is producing bullshit at scale. As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated "content" in any internet core sample is dwindling to homeopathic levels:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

This means that adding another order of magnitude more training data to AI won't just add massive computational expense – the data will be many orders of magnitude more expensive to acquire, even without factoring in the additional liability arising from new legal theories about scraping:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

That leaves us with "humans in the loop" – the idea that an AI's business model is selling software to businesses that will pair it with human operators who will closely scrutinize the code's guesses. There's a version of this that sounds plausible – the one in which the human operator is in charge, and the AI acts as an eternally vigilant "sanity check" on the human's activities.

For example, my car has a system that notices when I activate my blinker while there's another car in my blind-spot. I'm pretty consistent about checking my blind spot, but I'm also a fallible human and there've been a couple times where the alert saved me from making a potentially dangerous maneuver. As disciplined as I am, I'm also sometimes forgetful about turning off lights, or waking up in time for work, or remembering someone's phone number (or birthday). I like having an automated system that does the robotically perfect trick of never forgetting something important.

There's a name for this in automation circles: a "centaur." I'm the human head, and I've fused with a powerful robot body that supports me, doing things that humans are innately bad at.

That's the good kind of automation, and we all benefit from it. But it only takes a small twist to turn this good automation into a nightmare. I'm speaking here of the reverse-centaur: automation in which the computer is in charge, bossing a human around so it can get its job done. Think of Amazon warehouse workers, who wear haptic bracelets and are continuously observed by AI cameras as autonomous shelves shuttle in front of them and demand that they pick and pack items at a pace that destroys their bodies and drives them mad:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

Automation centaurs are great: they relieve humans of drudgework and let them focus on the creative and satisfying parts of their jobs. That's how AI-assisted coding is pitched: rather than looking up tricky syntax and other tedious programming tasks, an AI "co-pilot" is billed as freeing up its human "pilot" to focus on the creative puzzle-solving that makes coding so satisfying.

But an hallucinating AI is a terrible co-pilot. It's just good enough to get the job done much of the time, but it also sneakily inserts booby-traps that are statistically guaranteed to look as plausible as the good code (that's what a next-word-guessing program does: guesses the statistically most likely word).

This turns AI-"assisted" coders into reverse centaurs. The AI can churn out code at superhuman speed, and you, the human in the loop, must maintain perfect vigilance and attention as you review that code, spotting the cleverly disguised hooks for malicious code that the AI can't be prevented from inserting into its code. As "Lena" writes, "code review [is] difficult relative to writing new code":

https://twitter.com/qntm/status/1773779967521780169

Why is that? "Passively reading someone else's code just doesn't engage my brain in the same way. It's harder to do properly":

https://twitter.com/qntm/status/1773780355708764665

There's a name for this phenomenon: "automation blindness." Humans are just not equipped for eternal vigilance. We get good at spotting patterns that occur frequently – so good that we miss the anomalies. That's why TSA agents are so good at spotting harmless shampoo bottles on X-rays, even as they miss nearly every gun and bomb that a red team smuggles through their checkpoints:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

"Lena"'s thread points out that this is as true for AI-assisted driving as it is for AI-assisted coding: "self-driving cars replace the experience of driving with the experience of being a driving instructor":

https://twitter.com/qntm/status/1773841546753831283

In other words, they turn you into a reverse-centaur. Whereas my blind-spot double-checking robot allows me to make maneuvers at human speed and points out the things I've missed, a "supervised" self-driving car makes maneuvers at a computer's frantic pace, and demands that its human supervisor tirelessly and perfectly assesses each of those maneuvers. No wonder Cruise's murderous "self-driving" taxis replaced each low-waged driver with 1.5 high-waged technical robot supervisors:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

AI radiology programs are said to be able to spot cancerous masses that human radiologists miss. A centaur-based AI-assisted radiology program would keep the same number of radiologists in the field, but they would get less done: every time they assessed an X-ray, the AI would give them a second opinion. If the human and the AI disagreed, the human would go back and re-assess the X-ray. We'd get better radiology, at a higher price (the price of the AI software, plus the additional hours the radiologist would work).

But back to making the AI bubble pay off: for AI to pay off, the human in the loop has to reduce the costs of the business buying an AI. No one who invests in an AI company believes that their returns will come from business customers to agree to increase their costs. The AI can't do your job, but the AI salesman can convince your boss to fire you and replace you with an AI anyway – that pitch is the most successful form of AI disinformation in the world.

An AI that "hallucinates" bad advice to fliers can't replace human customer service reps, but airlines are firing reps and replacing them with chatbots:

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

An AI that "hallucinates" bad legal advice to New Yorkers can't replace city services, but Mayor Adams still tells New Yorkers to get their legal advice from his chatbots:

https://arstechnica.com/ai/2024/03/nycs-government-chatbot-is-lying-about-city-laws-and-regulations/

The only reason bosses want to buy robots is to fire humans and lower their costs. That's why "AI art" is such a pisser. There are plenty of harmless ways to automate art production with software – everything from a "healing brush" in Photoshop to deepfake tools that let a video-editor alter the eye-lines of all the extras in a scene to shift the focus. A graphic novelist who models a room in The Sims and then moves the camera around to get traceable geometry for different angles is a centaur – they are genuinely offloading some finicky drudgework onto a robot that is perfectly attentive and vigilant.

But the pitch from "AI art" companies is "fire your graphic artists and replace them with botshit." They're pitching a world where the robots get to do all the creative stuff (badly) and humans have to work at robotic pace, with robotic vigilance, in order to catch the mistakes that the robots make at superhuman speed.

Reverse centaurism is brutal. That's not news: Charlie Chaplin documented the problems of reverse centaurs nearly 100 years ago:

https://en.wikipedia.org/wiki/Modern_Times_(film)

As ever, the problem with a gadget isn't what it does: it's who it does it for and who it does it to. There are plenty of benefits from being a centaur – lots of ways that automation can help workers. But the only path to AI profitability lies in reverse centaurs, automation that turns the human in the loop into the crumple-zone for a robot:

https://estsjournal.org/index.php/ests/article/view/260

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Jorge Royan (modified) https://commons.wikimedia.org/wiki/File:Munich_-_Two_boys_playing_in_a_park_-_7328.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ai#supervised ai#humans in the loop#coding assistance#ai art#fully automated luxury communism#labor

379 notes

·

View notes

Text

The Lake House was underwater. The Marmonts' ambitions had finally been realized. Jules had dangled the painting like a lure and hooked an ocean. Their machines couldn't hold it. A fault in the system. The water rushed in. Filled it until it was ready to burst. All their successes and failures had led them here. The work had made them into monsters. But the Lake House was still theirs. It always would be. They could feel an outsider trespassing in their labs. "I know you're here." they called to the dark. They hunted, floor by floor. They wouldn't let the intruder stop their progress. This was their home. Their beloved tomb beneath the waves.

#alan wake 2#the lake house#remedy entertainment#remedyverse#remedy said hey you all know that couple in your social circle that should at the absolute minimum get divorced#now imagine if they were also extremely unethical government scientists operating without the supervision of a review board#and they despised the unquantifiable nature of art and its relationship to the artist#and also one of them used AI#truly the worst possible FBC personnel to be sent out to cauldron lake#i want to see them tear each other's throats out like rabid dogs#jules marmont#diana marmont

99 notes

·

View notes

Text

this is a very dumb headline for what is actually a nice little meditation on the value of writing. nothing especially groundbreaking here esp for people who are already anti-generative AI in education but i want to save it as it might be interesting to teach a piece like this alongside a more pro-AI piece sometime.

#i am trying to get better at distinguishing between productive and unproductive (in my mind) uses of AI#my understanding is still so rudimentary but#it seems like in the sciences it can be extremely valuable to have tools that help you crunch these massive datasets#or find new combinations of things that enable them to unlock big questions or challenges#and i am sure that in other fields AI can automate extremely mindnumbing tasks that do not enrich the human mind#and do not particularly benefit from having a human mind supervise them#but writing... like... what are we doing here

4 notes

·

View notes

Text

That's because it wasn't used as evidence per se. It was used in a pre-sentencing victim impact statement in a very misguided way of processing grief in the surviving family of the deceased.

It's one thing to use AI generated voices for yourself in a private setting. It's another to use such things in ways that have very real consequences for other people.

This needs to be banned from courtrooms posthaste.

We live in actual hell

#anti ai#this is a new level of ghoulishness that i am happy the DEFENCE LAWYER of all people appreciated#the one saving grace of all this is if it gives the convicted nightmares for the next while#but it's still not something we should be seeing used in court except under very narrowly defined circumstances#such as the use of pattern matching under close supervision by humans or the like

39K notes

·

View notes

Text

some facts about robert prevost (leo xiv) that i think are important to know:

while he was born in chicago, he has spent the vast majority of his life outside of america. he went to rome at a young age, then spent most of his priesthood in peru

pope leo xiii was well known for his interest in social justice -- the fact that prevost chose this name may show that he also nurses an interest

he was one of pope francis' closest advisors

he's described as being balanced in terms of his outlook, but has progressive views on some specific issues, including migrants and poverty

he is relatively young -- we will probably have pope leo xiv for a long time

quote from CBS article: "While Prevost is seen overall as a centrist, on some key social issues he's viewed as progressive. He has long embraced marginalized groups, a lot like Francis, who championed migrants and the poor."

another quote: "Cardinal George of Chicago, of happy memory, was one of my great mentors, and he said: 'Look, until America goes into political decline, there won't be an American pope.' And his point was, if America is kind of running the world politically, culturally, economically, they don't want America running the world religiously. So, I think there's some truth to that, that we're such a superpower and so dominant, they don't wanna give us, also, control over the church." -Robert Barron, bishop of a diocese in Minnesota

so while it does leave a bad taste in the mouth to have an american pope at this time, he is definitely not the kind of pope trump will like, nor will the conservative base. while he probably won't catapult the church into a lot of uncharted territory, he does look as if he will at the very least continue and support the work francis laid the groundwork for

additional information:

apparently he is involved in sexual assault coverups -- not fantastic, but to be honest the entire catholic church is so incredibly guilty of this it's not surprising

robert prevost has tweeted five times since joining twitter. one of those tweets was telling jd vance he does not understand love

updating information: "He didn't cover up those cases though. It seems like he opened the investigation in the case of the two women who were abused and encouraged them to go to the police, and then the investigation was closed by someone higher up than him afterwards. With the priest who abused kids, yes he let the abuser live at the priory—under supervision, which given that abusers have to live SOMEWHERE I'm glad that it was somewhere he was being observed. (In any case when the USCCB revised the rules two years later to be stricter, the abuser was moved somewhere else; Prevost was just following regulations as they existed at the time.) As for the accusations Sodalitum has made against him, Sodalitum themselves were dissolved last year for having a shitton of sexual abuse going on in their group, and since Prevost was part of shutting them down they hate his guts; any accusations they've made against him are extremely sus at best." this information seems reliable, but needs evidence attached to it. it is public knowledge that Sodalitum were dissolved (by Pope Francis).

even more information:

robert prevost was a high-ranking augustinian -- this order is notoriously pro-immigrant, pro-environment, and anti-materialism to the point of criticising capitalism

i already mentioned that the previous pope leo was something of a social activist. specifically, pope leo xiii specifically championed worker's rights

update: since taking the papal seat leo xiv (prevost) has specifically called out ai as a threat to the world and its workers, comparing leo xiii’s campaign for laborers to his own dedication to addressing this growing concern

8K notes

·

View notes

Text

youtube

1 note

·

View note

Text

Bridging the AI-Human Gap: How Reinforcement Learning from Human Feedback (RLHF) is Revolutionizing Smarter Machines

Imagine training a brilliant student who aces every exam but still struggles to navigate real-world conversations. This is the paradox of traditional artificial intelligence: models can process data at lightning speed, yet often fail to align with human intuition, ethics, or nuance. The solution? Reinforcement Learning from Human Feedback (RLHF.)

What is RLHF? (And Why Should You Care?)

Reinforcement Learning from Human Feedback (RLHF) is a hybrid training method where AI models learn not just from raw data, but from human-guided feedback. Think of it like teaching a child: instead of memorizing textbooks, the child learns by trying, making mistakes, and adapting based on a teacher’s corrections. Here’s how it works in practice:

Initial Training: An AI model learns from a dataset (e.g., customer service logs).

Human Feedback Loop: Humans evaluate the model’s outputs, ranking responses as “helpful,��� “irrelevant,” or “harmful.”

Iterative Refinement: The model adjusts its behavior to prioritize human-preferred outcomes.

Why it matters:

Reduces AI bias by incorporating ethical human judgment.

Creates systems that adapt to cultural, linguistic, and situational nuances.

Builds trust with end-users through relatable, context-aware interactions.

RLHF in Action: Real-World Wins 1. Smarter Chatbots That Actually Solve Problems Generic chatbots often frustrate users with scripted replies. RLHF changes this. For example, a healthcare company used RLHF to train a support bot using feedback from doctors and patients. The result? A 50% drop in escalations to human agents, as the bot learned to prioritize empathetic, medically accurate responses. 2. Content Moderation Without the Blind Spots Social platforms struggle to balance free speech and safety. RLHF-trained models can flag harmful content more accurately by learning from moderators’ nuanced decisions. One platform reduced false positives by 30% after integrating human feedback on context (e.g., distinguishing satire from hate speech). 3. Personalized Recommendations That Feel Human Streaming services using RLHF don’t just suggest content based on your watch history—they adapt to your mood.

The Hidden Challenges of RLHF (And How to Solve Them) While RLHF is powerful, it’s not plug-and-play. Common pitfalls include:

Feedback Bias: If human evaluators lack diversity, models inherit their blind spots.

Scalability: Collecting high-quality feedback at scale is resource-intensive.

Overfitting: Models may become too tailored to specific groups, losing global applicability.

The Fix? Partner with experts who specialize in RLHF infrastructure. Companies like Apex Data Sciences design custom feedback pipelines, source diverse human evaluators, and balance precision with scalability

Conclusion: Ready to Humanize Your AI?

RLHF isn’t just a technical upgrade it’s a philosophical shift. It acknowledges that the “perfect” AI isn’t the one with the highest accuracy score, but the one that resonates with the people it serves. If you’re building AI systems that need to understand as well as compute, explore how Apex Data Sciences’ RLHF services can help. Their end-to-end solutions ensure your models learn not just from data, but from the human experiences that data represents.

#RLHF#RLHF Services#AI#Reinforcement Learning from Human Feedback#Content Moderation#Supervised Fine-Tuning#SFT#SFT Solutions#RLHF & SFT Solutions

0 notes

Text

i also asked for animals in swedish.

chatgpt got the swedish translations right, but then dalle3 took its instructions and got a bit carried away with the umlauts

learn the mammals with the help of dalle-3!

more

#chatgpt#dalle3#ai generated#mammal#swedish#excessive umlauts#it is really irresponsible to allow an ai to wield a quintuple umlaut#those should only be used under careful human supervision

1K notes

·

View notes

Text

Dal Pre-training all'Expert Iteration: Il Percorso verso la Riproduzione di OpenAI Five

Il Reinforcement Learning (RL) rappresenta un approccio distintivo nel panorama del machine learning, basato sull’interazione continua tra un agente e il suo ambiente. In RL, l’agente apprende attraverso un ciclo di azioni e ricompense, con l’obiettivo di massimizzare il guadagno cumulativo a lungo termine. Questa strategia lo differenzia dagli approcci tradizionali come l’apprendimento…

#ai#AI-development#AI-research#artificial-intelligence#beam-search#computational-resources#deep-learning#expert-iteration#fine-tuning#machine-learning#MCTS#open-source-AI#OpenAI-Five#policy-initialization#PPO#pre-training#reinforcement-learning#reward-design#sequential-revisions#supervised-learning

0 notes

Text

mGPS-An invisible map on your skin

Ever got confused about whether you have to exit from the next diversion or not, while using Google Maps? A trivial problem, owing to the accuracy of GPS (Global Positioning System). Speaking about accuracy, we now have mGPS (Microbiome Geographic Population Structure). At first, the name seems like biology, geography and demography combined into one, but it’s not that complex. It is an AI tool which can track which place you have recently visited, be it a beach or a city centre.

The researchers found that when you touch a particular surface like a tree in the woods, you pick up bacteria that is unique to that area, advocating that unique populations of bacteria exist in different locations. This phenomenon of the unique existence of microorganisms is called microbiome. Eran Elhaik, who led the study stated that they analyzed extensive datasets of microbiome samples from urban environments, soil and marine ecosystems and trained the AI model to identify unique proportions of it and link them to specific locations. Simply put, it’s like analyzing the unique blend of spices in a dish to figure out which country or region it came from. The analyzing bit is done by the AI tool, which already knows which spices belong to which region as the data has been fed into it.

The research team gathered a vast collection of microbiome samples, including 4135 samples from public transit systems in 53 cities, 237 soil samples from 18 countries, and 131 marine samples from nine different water bodies. The tool was successful at identifying the city source for 92% of the urban samples.

But how was the tool trained anyway, or how are any of the AI tools trained?

First thing first- Data collection and labelling. Both go hand-in-hand, for example, the collected microbiome samples must have been labelled with their geographic origin like country or environment type (urban, soil, marine). Then, after cleaning, the data is fed to the model. The model selection part is also a crucial one. An advanced model like a supervised machine learning model or a deep learning model must have been chosen. To improve the efficiency of the tool, it will be trained continuously on the labelled data of different samples with unique compositions.

What’s even more interesting is its application-

Imagine the cops arresting a set of suspects and running their microorganism orientation through the AI tool to know where they were on the night of the crime, or, the AI tool accurately identifying the location from where a particular virus was picked up and eliminating it from the source itself. By analyzing artefacts through mGPS, archaeologists would be able to track human migration accurately.

Technologies like mGPS are not difficult to build, at least in theory. Execution might be an issue due to the collection and feeding of vast amounts of samples. However, with collaboration and support, it can be executed, and with ease as well. Ultimately, it comes down to the extent of your imagination.

You can build it if you can imagine it.

#ai#ai tools#bacteria#microbiome#microbiology#science#technology#microorganisms#ai model#supervision#supervisedlearning#deeplearning#imagination

0 notes

Text

Discover Self-Supervised Learning for LLMs

Artificial intelligence is transforming the world at an unprecedented pace, and at the heart of this revolution lies a powerful learning technique: self-supervised learning. Unlike traditional methods that demand painstaking human effort to label data, self-supervised learning flips the script, allowing AI models to teach themselves from the vast oceans of unlabeled data that exist today. This method has rapidly emerged as the cornerstone for training Large Language Models (LLMs), powering applications from virtual assistants to creative content generation. It drives a fundamental shift in our thinking about AI's societal role.

Self-supervised learning propels LLMs to new heights by enabling them to learn directly from the data—no external guidance is needed. It's a simple yet profoundly effective concept: train a model to predict missing parts of the data, like guessing the next word in a sentence. But beneath this simplicity lies immense potential. This process enables AI to capture the depth and complexity of human language, grasp the context, understand the meaning, and even accumulate world knowledge. Today, this capability underpins everything from chatbots that respond in real time to personalized learning tools that adapt to users' needs.

This approach's advantages go far beyond just efficiency. By tapping into a virtually limitless supply of data, self-supervised learning allows LLMs to scale massively, processing billions of parameters and honing their ability to understand and generate human-like text. It democratizes access to AI, making it cheaper and more flexible and pushing the boundaries of what these models can achieve. And with the advent of even more sophisticated strategies like autonomous learning, where models continually refine their understanding without external input, the potential applications are limitless. We will try to understand how self-supervised learning works, its benefits for LLMs, and the profound impact it is already having on AI applications today. From boosting language comprehension to cutting costs and making AI more accessible, the advantages are clear and they're just the beginning. As we stand on the brink of further advancements, self-supervised learning is set to redefine the landscape of artificial intelligence, making it more capable, adaptive, and intelligent than ever before.

Understanding Self-Supervised Learning

Self-supervised learning is a groundbreaking approach that has redefined how large language models (LLMs) are trained, going beyond the boundaries of AI. We are trying to understand what self-supervised learning entails, how it differs from other learning methods, and why it has become the preferred choice for training LLMs.

Definition and Differentiation

At its core, self-supervised learning is a machine learning paradigm where models learn from raw, unlabeled data by generating their labels. Unlike supervised learning, which relies on human-labeled data, or unsupervised learning, which searches for hidden patterns in data without guidance, self-supervised learning creates supervisory signals from the data.

For example, a self-supervised learning model might take a sentence like "The cat sat on the mat" and mask out the word "mat." The model's task is to predict the missing word based on the context provided by the rest of the sentence. This way, we can get the model to learn the rules of grammar, syntax, and context without requiring explicit annotations from humans.

Core Mechanism: Next-Token Prediction

A fundamental aspect of self-supervised learning for LLMs is next-token prediction, a task in which the model anticipates the next word based on the preceding words. While this may sound simple, it is remarkably effective in teaching a model about the complexities of human language.

Here's why next-token prediction is so powerful:

Grammar and Syntax

To predict the next word accurately, the model must learn the rules that govern sentence structure. For example, after seeing different types of sentences, the model understands that "The cat" is likely to be followed by a verb like "sat" or "ran."

Semantics

The model is trained to understand the meanings of words and their relationships with each other. For example, if you want to say, "The cat chased the mouse," the model might predict "mouse" because it understands the words "cat" and "chased" are often used with "mouse."

Context

Effective prediction requires understanding the broader context. In a sentence like "In the winter, the cat sat on the," the model might predict "rug" or "sofa" instead of "grass" or "beach," recognizing that "winter" suggests an indoor setting.

World Knowledge

Over time, as the model processes vast amounts of text, it accumulates knowledge about the world, making more informed predictions based on real-world facts and relationships. This simple yet powerful task forms the basis of most modern LLMs, such as GPT-3 and GPT-4, allowing them to generate human-like text, understand context, and perform various language-related tasks with high proficiency .

The Transformer Architecture

Self-supervised learning for LLMs relies heavily on theTransformer architecture, a neural network design introduced in 2017 that has since become the foundation for most state-of-the-art language models. The Transformer Architecture is great for processing sequential data, like text, because it employs a mechanism known as attention. Here's how it works:

Attention Mechanism

Instead of processing text sequentially, like traditional recurrent neural networks (RNNs), Transformers use an attention mechanism to weigh the importance of each word in a sentence relative to every other word. The model can focus on the most relevant aspects of the text, even if they are far apart. For example, in the sentence "The cat that chased the mouse is on the mat," the model can pay attention to both "cat" and "chased" while predicting the next word.

Parallel Processing

Unlike RNNs, which process words one at a time, Transformers can analyze entire sentences in parallel. This makes them much faster and more efficient, especially when dealing large datasets. This efficiency is critical when training on datasets containing billions of words.

Scalability

The Transformer's ability to handle vast amounts of data and scale to billions of parameters makes it ideal for training LLMs. As models get larger and more complex, the attention mechanism ensures they can still capture intricate patterns and relationships in the data.

By leveraging the Transformer architecture, LLMs trained with self-supervised learning can learn from context-rich datasets with unparalleled efficiency, making them highly effective at understanding and generating language.

Why Self-Supervised Learning?

The appeal of self-supervised learning lies in its ability to harness vast amounts of unlabeled text data. Here are some reasons why this method is particularly effective for LLMs:

Utilization of Unlabeled Data

Self-supervised learning uses massive amounts of freely available text data, such as web pages, books, articles, and social media posts. This approach eliminates costly and time-consuming human annotation, allowing for more scalable and cost-effective model training.

Learning from Context

Because the model learns by predicting masked parts of the data, it naturally develops an understanding of context, which is crucial for generating coherent and relevant text. This makes LLMs trained with self-supervised learning well-suited for tasks like translation, summarization, and content generation.

Self-supervised learning enables models to continuously improve as they process more data, refining their understanding and capabilities. This dynamic adaptability is a significant advantage over traditional models, which often require retraining from scratch to handle new tasks or data.

In summary, self-supervised learning has become a game-changing approach for training LLMs, offering a powerful way to develop sophisticated models that understand and generate human language. By leveraging the Transformer architecture and utilizing vast amounts of unlabeled data, this method equips LLMs that can perform a lot of tasks with remarkable proficiency, setting the stage for future even more advanced AI applications.

Key Benefits of Self-Supervised Learning for LLMs

Self-supervised learning has fundamentally reshaped the landscape of AI, particularly in training large language models (LLMs). Concretely, what are the primary benefits of this approach, which is to enhance LLMs' capabilities and performance?

Leverage of Massive Unlabeled Data

One of the most transformative aspects of self-supervised learning is its ability to utilize vast amounts of unlabeled data. Traditional machine learning methods rely on manually labeled datasets, which are expensive and time-consuming. In contrast, self-supervised learning enables LLMs to learn from the enormous quantities of online text—web pages, books, articles, social media, and more.

By tapping into these diverse sources, LLMs can learn language structures, grammar, and context on an unprecedented scale. This capability is particularly beneficial because: Self-supervised learning draws from varied textual sources, encompassing multiple languages, dialects, topics, and styles. This diversity allows LLMs to develop a richer, more nuanced understanding of language and context, which would be impossible with smaller, hand-labeled datasets. The self-supervised learning paradigm scales effortlessly to massive datasets containing billions or even trillions of words. This scale allows LLMs to build a comprehensive knowledge base, learning everything from common phrases to rare idioms, technical jargon, and even emerging slang without manual annotation.

Improved Language Understanding

Self-supervised learning significantly enhances an LLM's ability to understand and generate human-like text. LLMs trained with self-supervised learning can develop a deep understanding of language structures, semantics, and context by predicting the next word or token in a sequence.

Deeper Grasp of Grammar and Syntax

LLMs implicitly learn grammar rules and syntactic structures through repetitive exposure to language patterns. This capability allows them to construct sentences that are not only grammatically correct but also contextually appropriate.

Contextual Awareness

Self-supervised learning teaches LLMs to consider the broader context of a passage. When predicting a word in a sentence, the model doesnt just look at the immediately preceding words but considers th'e entire sentence or even the paragraph. This context awareness is crucial for generating coherent and contextually relevant text.

Learning World Knowledge

LLMs process massive datasets and accumulate factual knowledge about the world. This helps them make informed predictions, generate accurate content, and even engage in reasoning tasks, making them more reliable for applications like customer support, content creation, and more.

Scalability and Cost-Effectiveness

The cost-effectiveness of self-supervised learning is another major benefit. Traditional supervised learning requires vast amounts of labeled data, which can be expensive. In contrast, self-supervised learning bypasses the need for labeled data by using naturally occurring structures within the data itself.

Self-supervised learning dramatically cuts costs by eliminating the reliance on human-annotated datasets, making it feasible to train very large models. This approach democratizes access to AI by lowering the barriers to entry for researchers, developers, and companies. Because self-supervised learning scales efficiently across large datasets, LLMs trained with this method can handle billions or trillions of parameters. This capability makes them suitable for various applications, from simple language tasks to complex decision-making processes.

Autonomous Learning and Continuous Improvement

Recent advancements in self-supervised learning have introduced the concept of Autonomous Learning, where LLMs learn in a loop, similar to how humans continuously learn and refine their understanding.

In autonomous learning, LLMs first go through an "open-book" learning phase, absorbing information from vast datasets. Next, they engage in "closed-book" learning, recalling and reinforcing their understanding without referring to external sources. This iterative process helps the model optimize its understanding, improve performance, and adapt to new tasks over time. Autonomous learning allows LLMs to identify gaps in their knowledge and focus on filling them without human intervention. This self-directed learning makes them more accurate, efficient, and versatile.

Better Generalization and Adaptation

One of the standout benefits of self-supervised learning is the ability of LLMs to generalize across different domains and tasks. LLMs trained with self-supervised learning draw on a wide range of data. They are better equipped to handle various tasks, from generating creative content to providing customer support or technical guidance. They can quickly adapt to new domains or tasks with minimal retraining. This generalization ability makes LLMs more robust and flexible, allowing them to function effectively even when faced with new, unseen data. This adaptability is crucial for applications in fast-evolving fields like healthcare, finance, and technology, where the ability to handle new information quickly can be a significant advantage.

Support for Multimodal Learning

Self-supervised learning principles can extend beyond text to include other data types, such as images and audio. Multimodal learning enables LLMs to handle different forms of data simultaneously, enhancing their ability to generate more comprehensive and accurate content. For example, an LLM could analyze an image, generate a descriptive caption, and provide an audio summary simultaneously. This multimodal capability opens up new opportunities for AI applications in areas like autonomous vehicles, smart homes, and multimedia content creation, where diverse data types must be processed and understood together.

Enhanced Creativity and Problem-Solving

Self-supervised learning empowers LLMs to engage in creative and complex tasks.

Creative Content Generation

LLMs can produce stories, poems, scripts, and other forms of creative content by understanding context, tone, and stylistic nuances. This makes them valuable tools for creative professionals and content marketers.

Advanced Problem-Solving

LLMs trained on diverse datasets can provide novel solutions to complex problems, assisting in medical research, legal analysis, and financial forecasting.

Reduction of Bias and Improved Fairness

Self-supervised learning helps mitigate some biases inherent in smaller, human-annotated datasets. By training on a broad array of data sources, LLMs can learn from various perspectives and experiences, reducing the likelihood of bias resulting from limited data sources. Although self-supervised learning doesn't eliminate bias, the continuous influx of diverse data allows for ongoing adjustments and refinements, promoting fairness and inclusivity in AI applications.

Improved Efficiency in Resource Usage

Self-supervised learning optimizes the use of computational resources. It can directly use raw data instead of extensive preprocessing and manual data cleaning, reducing the time and resources needed to prepare data for training. As learning efficiency improves, these models can be deployed on less powerful hardware, making advanced AI technologies more accessible to a broader audience.

Accelerated Innovation in AI Applications

The benefits of self-supervised learning collectively accelerate innovation across various sectors. LLMs trained with self-supervised learning can analyze medical texts, support diagnosis, and provide insights from vast amounts of unstructured data, aiding healthcare professionals. In the financial sector, LLMs can assist in analyzing market trends, generating reports, automating routine tasks, and enhancing efficiency and decision-making. LLMs can act as personalized tutors, generating tailored content and quizzes that enhance students' learning experiences.

Practical Applications of Self-Supervised Learning in LLMs

Self-supervised learning has enabled LLMs to excel in various practical applications, demonstrating their versatility and power across multiple domains

Virtual Assistants and Chatbots

Virtual assistants and chatbots represent one of the most prominent applications of LLMs trained with self-supervised learning. These models can do the following:

Provide Human-Like Responses

By understanding and predicting language patterns, LLMs deliver natural, context-aware responses in real-time, making them highly effective for customer service, technical support, and personal assistance.

Handle Complex Queries

They can handle complex, multi-turn conversations, understand nuances, detect user intent, and manage diverse topics accurately.

Content Generation and Summarization

LLMs have revolutionized content creation, enabling automated generation of high-quality text for various purposes.

Creative Writing

LLMs can generate engaging content that aligns with specific tone and style requirements, from blogs to marketing copies. This capability reduces the time and effort needed for content production while maintaining quality and consistency. Writers can use LLMs to brainstorm ideas, draft content, and even polish their work by generating multiple variations.

Text Summarization

LLMs can distill lengthy articles, reports, or documents into concise summaries, making information more accessible and easier to consume. This is particularly useful in fields like journalism, education, and law, where large volumes of text need to be synthesized quickly. Summarization algorithms powered by LLMs help professionals keep up with information overload by providing key takeaways and essential insights from long documents.

Domain-Specific Applications

LLMs trained with self-supervised learning have proven their worth in domain-specific applications where understanding complex and specialized content is crucial. LLMs assist in interpreting medical literature, supporting diagnoses, and offering treatment recommendations. Analyzing a wide range of medical texts can provide healthcare professionals with rapid insights into potential drug interactions and treatment protocols based on the latest research. This helps doctors stay current with the vast and ever-expanding medical knowledge.

LLMs analyze market trends in finance, automate routine tasks like report generation, and enhance decision-making processes by providing data-driven insights. They can help with risk assessment, compliance monitoring, and fraud detection by processing massive datasets in real time. This capability reduces the time needed to make informed decisions, ultimately enhancing productivity and accuracy. LLMs can assist with tasks such as contract analysis, legal research, and document review in the legal domain. By understanding legal terminology and context, they can quickly identify relevant clauses, flag potential risks, and provide summaries of lengthy legal documents, significantly reducing the workload for lawyers and paralegals.

How to Implement Self-Supervised Learning for LLMs

Implementing self-supervised learning for LLMs involves several critical steps, from data preparation to model training and fine-tuning. Here's a step-by-step guide to setting up and executing self-supervised learning for training LLMs:

Data Collection and Preparation

Data Collection

Web Scraping

Collect text from websites, forums, blogs, and online articles.

Open Datasets

For medical texts, use publicly available datasets such as Common Crawl, Wikipedia, Project Gutenberg, or specialized corpora like PubMed.

Proprietary Data

Include proprietary or domain-specific data to tailor the model to specific industries or applications, such as legal documents or company-specific communications.

Pre-processing

Tokenization

Convert the text into smaller units called tokens. Tokens may be words, subwords, or characters, depending on the model's architecture.

Normalization

Clean the text by removing special characters, URLs, excessive whitespace, and irrelevant content. If case sensitivity is not essential, standardize the text by converting it to lowercase.

Data Augmentation

Introduce variations in the text, such as paraphrasing or back-translation, to improve the model's robustness and generalization capabilities.

Shuffling and Splitting

Randomly shuffle the data to ensure diversity and divide it into training, validation, and test sets.

Define the Learning Objective

Self-supervised learning requires setting specific learning objectives for the model:

Next-Token Prediction

Set up the primary task of predicting the next word or token in a sequence. Implement "masked language modeling" (MLM), where a certain percentage of input tokens are replaced with a mask token, and the model is trained to predict the original token. This helps the model learn the structure and flow of natural language.

Contrastive Learning (Optional)

Use contrastive learning techniques where the model learns to differentiate between similar and dissimilar examples. For instance, when given a sentence, slightly altered versions are generated, and the model is trained to distinguish the original from the altered versions, enhancing its contextual understanding.

Model Training and Optimization

After preparing the data and defining the learning objectives, proceed to train the model:

Initialize the Model

Start with a suitable architecture, such as a Transformer-based model (e.g., GPT, BERT). Use pre-trained weights to leverage existing knowledge and reduce the required training time if available.

Configure the Learning Process

Set hyperparameters such as learning rate, batch size, and sequence length. Use gradient-based optimization techniques like Adam or Adagrad to minimize the loss function during training.

Use Computational Resources Effectively

Training LLM systems demands a lot of computational resources, including GPUs or TPUs. The training process can be distributed across multiple devices, or cloud-based solutions can handle high processing demands.

Hyperparameter Tuning

Adjust hyperparameters regularly to find the optimal configuration. Experiment with different learning rates, batch sizes, and regularization methods to improve the model's performance.

Evaluation and Fine-Tuning

Once the model is trained, its performance is evaluated and fine-tuned for specific applications. Here is how it works:

Model Evaluation

Use perplexity, accuracy, and loss metrics to evaluate the model's performance. Test the model on a separate validation set to measure its generalization ability to new data.

Fine-Tuning

Refine the model for specific domains or tasks using labeled data or additional unsupervised techniques. Fine-tune a general-purpose LLM on domain-specific datasets to make it more accurate for specialized applications.

Deploy and Monitor

After fine-tuning, deploy the model in a production environment. Continuously monitor its performance and collect feedback to identify areas for further improvement.

Advanced Techniques: Autonomous Learning

To enhance the model further, consider implementing autonomous learning techniques:

Open-Book and Closed-Book Learning

Train the model to first absorb information from datasets ("open-book" learning) and then recall and reinforce this knowledge without referring back to the original data ("closed-book" learning). This process mimics human learning patterns, allowing the model to optimize its understanding continuously.

Self-optimization and Feedback Loops

Incorporate feedback loops where the model evaluates its outputs, identifies errors or gaps, and adjusts its internal parameters accordingly. This self-reinforcing process leads to ongoing performance improvements without requiring additional labeled data.

Ethical Considerations and Bias Mitigation

Implementing self-supervised learning also involves addressing ethical considerations:

Bias Detection and Mitigation

Audit the training data regularly for biases. Use techniques such as counterfactual data augmentation or fairness constraints during training to minimize bias.

Transparency and Accountability

Ensure the model's decision-making processes are transparent. Develop methods to explain the model's outputs and provide users with tools to understand how decisions are made.

Concluding Thoughts

Implementing self-supervised learning for LLMs offers significant benefits, including leveraging massive unlabeled data, enhancing language understanding, improving scalability, and reducing costs. This approach's practical applications span multiple domains, from virtual assistants and chatbots to specialized healthcare, finance, and law uses. By following a systematic approach to data collection, training, optimization, and evaluation, organizations can harness the power of self-supervised learning to build advanced LLMs that are versatile, efficient, and capable of continuous improvement. As this technology continues to evolve, it promises to push the boundaries of what AI can achieve, paving the way for more intelligent, adaptable, and creative systems to better understand and interact with the world around us.

Ready to explore the full potential of LLM?

Our AI-savvy team tackles the latest advancements in self-supervised learning to build smarter, more adaptable AI systems tailored to your needs. Whether you're looking to enhance customer experiences, automate content generation, or revolutionize your industry with innovative AI applications, we've got you covered. Keep your business from falling behind in the digital age. Connect with our team of experts today to discover how our AI-driven strategies can transform your operations and drive sustainable growth. Let's shape the future together — get in touch with Coditude now and take the first step toward a smarter tomorrow!

#AI#artificial intelligence#LLM#transformer architecture#self supervised learning#NLP#Machine Learning#scalability#cost effectiveness#unlabelled data#chatbot#virtual assistants#increased efficiency#data quality

0 notes

Text

How to Choose the Right Machine Learning Course for Your Career

As the demand for machine learning professionals continues to surge, choosing the right machine learning course has become crucial for anyone looking to build a successful career in this field. With countless options available, from free online courses to intensive boot camps and advanced degrees, making the right choice can be overwhelming.

#machine learning course#data scientist#AI engineer#machine learning researcher#eginner machine learning course#advanced machine learning course#Python programming#data analysis#machine learning curriculum#supervised learning#unsupervised learning#deep learning#natural language processing#reinforcement learning#online machine learning course#in-person machine learning course#flexible learning#machine learning certification#Coursera machine learning#edX machine learning#Udacity machine learning#machine learning instructor#course reviews#student testimonials#career support#job placement#networking opportunities#alumni network#machine learning bootcamp#degree program

0 notes

Text

Supervised Learning Vs Unsupervised Learning in Machine Learning

Summary: Supervised learning uses labeled data for predictive tasks, while unsupervised learning explores patterns in unlabeled data. Both methods have unique strengths and applications, making them essential in various machine learning scenarios.

Introduction

Machine learning is a branch of artificial intelligence that focuses on building systems capable of learning from data. In this blog, we explore two fundamental types: supervised learning and unsupervised learning. Understanding the differences between these approaches is crucial for selecting the right method for various applications.

Supervised learning vs unsupervised learning involves contrasting their use of labeled data and the types of problems they solve. This blog aims to provide a clear comparison, highlight their advantages and disadvantages, and guide you in choosing the appropriate technique for your specific needs.

What is Supervised Learning?

Supervised learning is a machine learning approach where a model is trained on labeled data. In this context, labeled data means that each training example comes with an input-output pair.

The model learns to map inputs to the correct outputs based on this training. The goal of supervised learning is to enable the model to make accurate predictions or classifications on new, unseen data.

Key Characteristics and Features

Supervised learning has several defining characteristics:

Labeled Data: The model is trained using data that includes both the input features and the corresponding output labels.

Training Process: The algorithm iteratively adjusts its parameters to minimize the difference between its predictions and the actual labels.

Predictive Accuracy: The success of a supervised learning model is measured by its ability to predict the correct label for new, unseen data.

Types of Supervised Learning Algorithms

There are two primary types of supervised learning algorithms:

Regression: This type of algorithm is used when the output is a continuous value. For example, predicting house prices based on features like location, size, and age. Common algorithms include linear regression, decision trees, and support vector regression.

Classification: Classification algorithms are used when the output is a discrete label. These algorithms are designed to categorize data into predefined classes. For instance, spam detection in emails, where the output is either "spam" or "not spam." Popular classification algorithms include logistic regression, k-nearest neighbors, and support vector machines.

Examples of Supervised Learning Applications

Supervised learning is widely used in various fields:

Image Recognition: Identifying objects or people in images, such as facial recognition systems.

Natural Language Processing (NLP): Sentiment analysis, where the model classifies the sentiment of text as positive, negative, or neutral.

Medical Diagnosis: Predicting diseases based on patient data, like classifying whether a tumor is malignant or benign.

Supervised learning is essential for tasks that require accurate predictions or classifications, making it a cornerstone of many machine learning applications.

What is Unsupervised Learning?

Unsupervised learning is a type of machine learning where the algorithm learns patterns from unlabelled data. Unlike supervised learning, there is no target or outcome variable to guide the learning process. Instead, the algorithm identifies underlying structures within the data, allowing it to make sense of the data's hidden patterns and relationships without prior knowledge.

Key Characteristics and Features

Unsupervised learning is characterized by its ability to work with unlabelled data, making it valuable in scenarios where labeling data is impractical or expensive. The primary goal is to explore the data and discover patterns, groupings, or associations.

Unsupervised learning can handle a wide variety of data types and is often used for exploratory data analysis. It helps in reducing data dimensionality and improving data visualization, making complex datasets easier to understand and analyze.

Types of Unsupervised Learning Algorithms

Clustering: Clustering algorithms group similar data points together based on their features. Popular clustering techniques include K-means, hierarchical clustering, and DBSCAN. These methods are used to identify natural groupings in data, such as customer segments in marketing.

Association: Association algorithms find rules that describe relationships between variables in large datasets. The most well-known association algorithm is the Apriori algorithm, often used for market basket analysis to discover patterns in consumer purchase behavior.

Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) reduce the number of features in a dataset while retaining its essential information. This helps in simplifying models and reducing computational costs.

Examples of Unsupervised Learning Applications

Unsupervised learning is widely used in various fields. In marketing, it segments customers based on purchasing behavior, allowing personalized marketing strategies. In biology, it helps in clustering genes with similar expression patterns, aiding in the understanding of genetic functions.

Additionally, unsupervised learning is used in anomaly detection, where it identifies unusual patterns in data that could indicate fraud or errors.

This approach's flexibility and exploratory nature make unsupervised learning a powerful tool in data science and machine learning.

Advantages and Disadvantages

Understanding the strengths and weaknesses of both supervised and unsupervised learning is crucial for selecting the right approach for a given task. Each method offers unique benefits and challenges, making them suitable for different types of data and objectives.

Supervised Learning

Pros: Supervised learning offers high accuracy and interpretability, making it a preferred choice for many applications. It involves training a model using labeled data, where the desired output is known. This enables the model to learn the mapping from input to output, which is crucial for tasks like classification and regression.

The interpretability of supervised models, especially simpler ones like decision trees, allows for better understanding and trust in the results. Additionally, supervised learning models can be highly efficient, especially when dealing with structured data and clearly defined outcomes.

Cons: One significant drawback of supervised learning is the requirement for labeled data. Gathering and labeling data can be time-consuming and expensive, especially for large datasets.

Moreover, supervised models are prone to overfitting, where the model performs well on training data but fails to generalize to new, unseen data. This occurs when the model becomes too complex and starts learning noise or irrelevant patterns in the training data. Overfitting can lead to poor model performance and reduced predictive accuracy.

Unsupervised Learning

Pros: Unsupervised learning does not require labeled data, making it a valuable tool for exploratory data analysis. It is particularly useful in scenarios where the goal is to discover hidden patterns or groupings within data, such as clustering similar items or identifying associations.

This approach can reveal insights that may not be apparent through supervised learning methods. Unsupervised learning is often used in market segmentation, customer profiling, and anomaly detection.

Cons: However, unsupervised learning typically offers less accuracy compared to supervised learning, as there is no guidance from labeled data. Evaluating the results of unsupervised learning can also be challenging, as there is no clear metric to measure the quality of the output.

The lack of labeled data means that interpreting the results requires more effort and domain expertise, making it difficult to assess the effectiveness of the model.

Frequently Asked Questions

What is the main difference between supervised learning and unsupervised learning?

Supervised learning uses labeled data to train models, allowing them to predict outcomes based on input data. Unsupervised learning, on the other hand, works with unlabeled data to discover patterns and relationships without predefined outputs.

Which is better for clustering tasks: supervised or unsupervised learning?

Unsupervised learning is better suited for clustering tasks because it can identify and group similar data points without predefined labels. Techniques like K-means and hierarchical clustering are commonly used for such purposes.

Can supervised learning be used for anomaly detection?

Yes, supervised learning can be used for anomaly detection, particularly when labeled data is available. However, unsupervised learning is often preferred in cases where anomalies are not predefined, allowing the model to identify unusual patterns autonomously.

Conclusion

Supervised learning and unsupervised learning are fundamental approaches in machine learning, each with distinct advantages and limitations. Supervised learning excels in predictive accuracy with labeled data, making it ideal for tasks like classification and regression.

Unsupervised learning, meanwhile, uncovers hidden patterns in unlabeled data, offering valuable insights in clustering and association tasks. Choosing the right method depends on the nature of the data and the specific objectives.

#Supervised Learning Vs Unsupervised Learning in Machine Learning#Supervised Learning Vs Unsupervised Learning#Supervised Learning#Unsupervised Learning#Machine Learning#ML#AI#Artificial Intelligence

0 notes

Text

0 notes

Photo

A No-nonsense Approach to Deep Learning, LLM, Supervised Learning, Generative AI, and Everything in Between

This is a short preview of the article: With this post I will share a few resources freely available in the internet that I believe can serve as an entry point for understanding the world around AI in a no-nonsense manner. The domain is relatively vast and we will cover topics like Deep Learning, Large Language Models, Supervised

If you like it consider checking out the full version of the post at: A No-nonsense Approach to Deep Learning, LLM, Supervised Learning, Generative AI, and Everything in Between

If you are looking for ideas for tweet or re-blog this post you may want to consider the following hashtags:

Hashtags: #DeepLearning, #GenerativeAI, #LLM, #SuperviseLearning

The Hashtags of the Categories are: #BigData, #MachineLearning

A No-nonsense Approach to Deep Learning, LLM, Supervised Learning, Generative AI, and Everything in Between is available at the following link: https://francescolelli.info/big-data/a-no-nonsense-approach-to-deep-learning-llm-supervise-learning-generative-ai-and-everything-in-between/ You will find more information, stories, examples, data, opinions and scientific papers as part of a collection of articles about Information Management, Computer Science, Economics, Finance and More.

The title of the full article is: A No-nonsense Approach to Deep Learning, LLM, Supervised Learning, Generative AI, and Everything in Between

It belong to the following categories: Big Data, Machine Learning

The most relevant keywords are: deep Learning, Generative AI, LLM, Supervise Learning

It has been published by Francesco Lelli at Francesco Lelli a blog about Information Management, Computer Science, Finance, Economics and nearby ideas and opinions

With this post I will share a few resources freely available in the internet that I believe can serve as an entry point for understanding the world around AI in a no-nonsense manner. The domain is relatively vast and we will cover topics like Deep Learning, Large Language Models, Supervised

Hope you will find it interesting and that it will help you in your journey

With this post I will share a few resources freely available in the internet that I believe can serve as an entry point for understanding the world around AI in a no-nonsense manner. The domain is relatively vast and we will cover topics like Deep Learning, Large Language Models, Supervised Learning, Generative AI, and a…

0 notes

Text

Define machine learning: 5 machine learning types to know

Machine learning (ML) can be used in computer vision, large language models (LLMs), speech recognition, self-driving cars, and many more use cases to make decisions in healthcare, human resources, finance, and other areas.

However, ML’s rise is complicated. ML validation and training datasets are generally aggregated by humans, who are biased and error-prone. Even if an ML model isn’t biased or erroneous, using it incorrectly can cause harm.

Diversifying enterprise AI and ML usage can help preserve a competitive edge. Distinct ML algorithms have distinct benefits and capabilities that teams can use for different jobs. IBM will cover the five main categories and their uses.

Define machine learning

ML is a computer science, data science, and AI subset that lets computers learn and improve from data without programming.

ML models optimize performance utilizing algorithms and statistical models that deploy jobs based on data patterns and inferences. Thus, ML predicts an output using input data and updates outputs as new data becomes available.

Machine learning algorithms recommend products based on purchasing history on retail websites. IBM, Amazon, Google, Meta, and Netflix use ANNs to make tailored suggestions on their e-commerce platforms. Retailers utilize chat bots, virtual assistants, ML, and NLP to automate shopping experiences.

Machine learning types

Supervised, unsupervised, semi-supervised, self-supervised, and reinforcement machine learning algorithms exist.

1.Supervised machine learning

Supervised machine learning trains the model on a labeled dataset with the target or outcome variable known. Data scientists constructing a tornado predicting model might enter date, location, temperature, wind flow patterns, and more, and the output would be the actual tornado activity for those days.

Several algorithms are employed in supervised learning for risk assessment, image identification, predictive analytics, and fraud detection.

Regression algorithms predict output values by discovering linear correlations between actual or continuous quantities (e.g., income, temperature). Regression methods include linear regression, random forest, gradient boosting, and others.

Labeling input data allows classification algorithms to predict categorical output variables (e.g., “junk” or “not junk”). Logistic regression, k-nearest neighbors, and SVMs are classification algorithms.

Naïve Bayes classifiers enable huge dataset classification. They’re part of generative learning algorithms that model class or category input distribution. Decision trees in Naïve Bayes algorithms support regression and classification techniques.

Neural networks, with many linked processing nodes, replicate the human brain and can do natural language translation, picture recognition, speech recognition, and image generation.

Random forest methods combine decision tree results to predict a value or category.

2. Unsupervised machine learning

Apriori, Gaussian Mixture Models (GMMs), and principal component analysis (PCA) use unlabeled datasets to make inferences, enabling exploratory data analysis, pattern detection, and predictive modeling.

Cluster analysis is the most frequent unsupervised learning method, which groups data points by value similarity for customer segmentation and anomaly detection. Association algorithms help data scientists visualize and reduce dimensionality by identifying associations between data objects in huge databases.

K-means clustering organizes data points by size and granularity, clustering those closest to a centroid under the same category. Market, document, picture, and compression segmentation use K-means clustering.

Hierarchical clustering includes agglomerative clustering, where data points are isolated into groups and then merged iteratively based on similarity until one cluster remains, and divisive clustering, where a single data cluster is divided by data point differences.

Probabilistic clustering group’s data points by distribution likelihood to tackle density estimation or “soft” clustering problems.

Often, unsupervised ML models power “customers who bought this also bought…” recommendation systems.

3. Self-supervised machine learning

Self-supervised learning (SSL) lets models train on unlabeled data instead of enormous annotated and labeled datasets. SSL algorithms, also known as predictive or pretext learning algorithms automatically classify and solve unsupervised problems by learning one portion of the input from another. Computer vision and NLP require enormous amounts of labeled training data to train models, making these methods usable.

4. Reinforcement learning

Dynamic programming dubbed reinforcement learning from human feedback (RLHF) trains algorithms using reward and punishment. To use reinforcement learning, an agent acts in a given environment to achieve a goal. The agent is rewarded or penalized based on a measure (usually points) to encourage good behavior and discourage negative behavior. Repetition teaches the agent the optimum methods.

Video games often use reinforcement learning techniques to teach robots human tasks.

5. Semi-supervised learning

The fifth machine learning method combines supervised and unsupervised learning.

Semi-supervised learning algorithms learn from a small labeled dataset and a large unlabeled dataset because the labeled data guides the learning process. A semi-supervised learning algorithm may find data clusters using unsupervised learning and label them using supervised learning.

Semi-supervised machine learning uses generative adversarial networks (GANs) to produce unlabeled data by training two neural networks.

ML models can gain insights from company data, but their vulnerability to human/data bias makes ethical AI practices essential.

Manage multiple ML models with watstonx.ai.

Whether they employ AI or not, most people use machine learning, from developers to users to regulators. Adoption of ML technology is rising. Global machine learning market was USD 19 billion in 2022 and is predicted to reach USD 188 billion by 2030 (a CAGR of almost 37%).

The size of ML usage and its expanding business effect make understanding AI and ML technologies a key commitment that requires continuous monitoring and appropriate adjustments as technologies improve. IBM Watsonx.AI Studio simplifies ML algorithm and process management for developers.

IBM Watsonx.ai, part of the IBM Watsonx AI and data platform, leverages generative AI and a modern business studio to train, validate, tune, and deploy AI models faster and with less data. Advanced data production and classification features from Watsonx.ai enable enterprises optimize real-world AI performance with data insights.

In the age of data explosion, AI and machine learning are essential to corporate operations, tech innovation, and competition. However, as new pillars of modern society, they offer an opportunity to diversify company IT infrastructures and create technologies that help enterprises and their customers.

Read more on Govindhtech.com

#technology#govindhtech#technews#news#ai#machine learning#Reinforcement learning#Semi-supervised learning#Supervised machine learning

0 notes